Hey there! If you’ve ever wondered how your phone instantly knows who you are, how a self‑driving car spots a pedestrian, or why a photo‑editing app magically stitches together a panorama, the secret sauce lies in visual features recognition. In a nutshell, it’s the technology that lets machines pick out the most telling bits of an image—edges, corners, textures, and patterns—and use them to understand what they’re looking at, almost in the blink of an eye.

Why should you care? Because this capability is reshaping everything from healthcare diagnostics to social media filters, and it comes with a mix of exciting possibilities and important responsibilities. Let’s dive in together, keep it friendly, and explore both the wonder and the caution that come with this fast‑growing field.

Fast Answer Overview

Visual features recognition works by first locating “interest points” in an image: places that stand out because of contrast, shape, or texture. Then it creates a compact numeric description (a descriptor) of each point. Finally, the machine compares these descriptors across images to decide if they match. Think of it as a sophisticated game of “find the matching pair,” where the computer is the super‑sharp‑eyed player.
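To make the “compare descriptors” step concrete, here’s a minimal NumPy sketch of brute‑force nearest‑neighbor matching with Lowe’s ratio test, which is one common way (not the only one) to decide which descriptors match. The descriptors are random stand‑in vectors rather than real detector output, and match_descriptors is a hypothetical helper name.

```python
import numpy as np

def match_descriptors(desc_a, desc_b, ratio=0.75):
    """Return (i, j) pairs where desc_a[i] confidently matches desc_b[j].

    Brute-force nearest neighbour with Lowe's ratio test; desc_b needs >= 2 rows.
    """
    # Pairwise Euclidean distances, shape (len(desc_a), len(desc_b))
    dists = np.linalg.norm(desc_a[:, None, :] - desc_b[None, :, :], axis=2)
    matches = []
    for i, row in enumerate(dists):
        best, second = np.argsort(row)[:2]
        # Keep the match only if the best candidate is clearly closer than the runner-up
        if row[best] < ratio * row[second]:
            matches.append((i, int(best)))
    return matches

# Toy data: 50 random 128-D "descriptors", plus slightly noisy copies of three of them
rng = np.random.default_rng(0)
desc_b = rng.random((50, 128))
desc_a = desc_b[[3, 17, 42]] + 0.01 * rng.normal(size=(3, 128))
print(match_descriptors(desc_a, desc_b))  # [(0, 3), (1, 17), (2, 42)]
```

The ratio test throws away a match when the runner‑up candidate is almost as close as the winner, which is a cheap way to drop ambiguous pairs.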

That’s the high‑level answer. Now, let’s look at the building blocks that make it all happen.

Core Visual Features

Edges & Contours

Edges are the most basic visual cues: places where color or brightness changes abruptly. They’re like the outlines you draw when sketching a cartoon. Classic operators such as Sobel measure these intensity gradients, and the Canny detector builds on them (adding non‑maximum suppression and hysteresis thresholding) to produce a thin, binary edge map that highlights the shape of objects. In autonomous driving, edge detection is one classic building block for picking out lane markings on the road.

Corners & Interest Points

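Corners are points where two edges meet. Because they are well localized in both directions, they tend to be highly repeatable even when an object shifts or rotates. Algorithms like Harris, Shi‑Tomasi, and FAST are the workhorses behind many real‑time applications because they’re fast and reliable. Below is a rough comparison that many engineers find handy; treat the frame rates as indicative only, since actual speed depends heavily on image resolution, parameter settings, and hardware: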

| Detector | Approx. speed (fps) | Repeatability | Typical use case |
|---|---|---|---|
| Harris | 30 | High | Precision mapping |
| Shi‑Tomasi | 45 | Very High | Structure‑from‑motion |
| FAST | 200 | Medium | Mobile AR |

Blobs & Texture Patches

Blobs are regions of similar intensity or color that stand out from their surroundings—think of a cluster of dark pixels on a bright sky. Detectors based on Difference‑of‑Gaussians (DoG) or Laplacian of Gaussian (LoG) excel at finding these. In medical imaging, blob detection helps radiologists locate suspicious lesions that might indicate cancer.

Keypoints & Descriptors

Once an interest point is found, a descriptor translates its visual appearance into a vector of numbers. SIFT (Scale‑Invariant Feature Transform) offers a rich, 128‑dimensional description that tolerates scale and rotation changes, while ORB (Oriented FAST and Rotated BRIEF) provides a faster, binary alternative ideal for smartphones.

Here’s a tiny Python snippet (using OpenCV) that finds and draws ORB keypoints:

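```python
import cv2

img = cv2.imread('photo.jpg', cv2.IMREAD_GRAYSCALE)  # load as grayscale
if img is None:
    raise FileNotFoundError("Could not read 'photo.jpg'")

orb = cv2.ORB_create()
kp, des = orb.detectAndCompute(img, None)  # keypoints + binary descriptors
img_kp = cv2.drawKeypoints(img, kp, None, color=(0, 255, 0))  # draw in green
cv2.imwrite('orb_keypoints.jpg', img_kp)
```

This code typically runs in a fraction of a second on a typical laptop, which shows that useful visual analysis can be lightweight.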

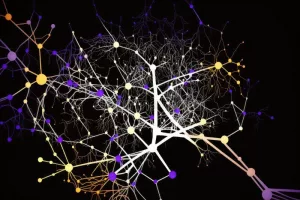

Deep‑Learned Features

Modern convolutional neural networks (CNNs) learn hierarchical features automatically: edge‑like filters in the first layers, textures in the middle, and object parts in the deeper layers. Vision Transformers (ViT) push this further by splitting an image into fixed‑size patches and treating each one like a “word” (token) in a Transformer language model. While these approaches often outperform handcrafted methods, they typically demand large training datasets and careful bias mitigation.
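To make “patches as words” concrete, here’s a minimal NumPy sketch of the first step of a ViT: chop a 224×224 RGB image into 16×16 patches, flatten each into a vector, and project it to an embedding. The random W_embed matrix stands in for what would be a learned linear layer, the 384‑dimensional embedding size is an arbitrary choice, and a real ViT would also add position embeddings and a class token, which this sketch omits.

```python
import numpy as np

def patchify(image, patch_size=16):
    """Split an (H, W, C) image into flattened non-overlapping patches ("tokens")."""
    h, w, c = image.shape
    p = patch_size
    assert h % p == 0 and w % p == 0, "image size must be a multiple of patch_size"
    patches = image.reshape(h // p, p, w // p, p, c)  # (rows, p, cols, p, C)
    patches = patches.transpose(0, 2, 1, 3, 4)        # (rows, cols, p, p, C)
    return patches.reshape(-1, p * p * c)             # (num_patches, p*p*C)

rng = np.random.default_rng(0)
image = rng.random((224, 224, 3))
tokens = patchify(image)                            # shape (196, 768)
W_embed = rng.normal(scale=0.02, size=(768, 384))   # stand-in for a learned projection
embeddings = tokens @ W_embed                       # one 384-D vector per patch
print(tokens.shape, embeddings.shape)               # (196, 768) (196, 384)
```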

Neuroscience Visual Parallel

What’s fascinating is that the brain does something remarkably similar. Let’s walk through a few neural players that echo the algorithms we just discussed.

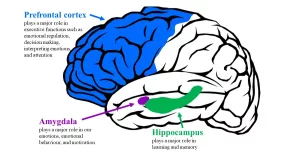

Amygdala Neurons

The amygdala reacts instantly to salient visual cues—especially those that signal potential danger, like an angry face. Its rapid firing mirrors how edge detectors flag abrupt intensity changes. Curious to learn more? Check out this deep dive on amygdala neurons.

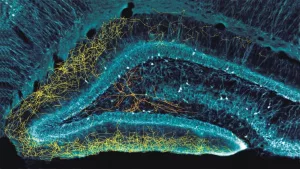

Hippocampus Neurons

The hippocampus stores “templates” of visual experiences, allowing us to recognize a familiar scene even when lighting or perspective shifts. That’s akin to a computer’s descriptor database, where past feature vectors help match new inputs. Explore the details in the article about hippocampus neurons.

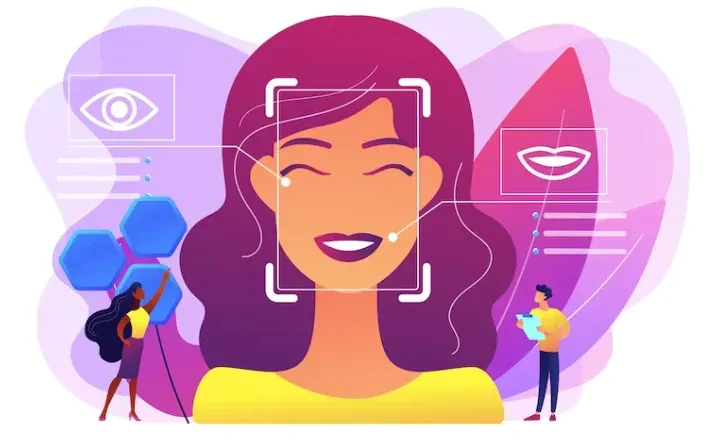

Face‑Recognition Neurons

Specialized cells in the fusiform gyrus fire selectively for faces, focusing on key facial landmarks such as the eyes, nose, and mouth. This biological focus on landmarks loosely parallels how modern face‑recognition systems extract and compare facial keypoints. Dive deeper with the article on face recognition neurons.

Social Connections Neuroscience

Our visual system also fuels social bonding: recognizing a friendly smile or a familiar gait strengthens connections. Studies in social connections neuroscience show that visual cues trigger reward pathways, which helps explain why accurate feature detection matters so much, both for people and for the AI systems we build.

Benefits of Recognition

When visual features are recognized reliably, the world becomes a lot more convenient and safe.

- Speed & Efficiency: Real‑time object detection lets drones avoid obstacles instantly.

- Robustness Across Conditions: Well‑designed detectors can stay reliable at noon, at night, or on a rainy day.

- Cross‑Domain Power: From diagnosing skin lesions to enabling AR filters, the same core ideas apply.

- Economic Upside: Companies save millions by automating tasks that once required manual labeling. A 2024 market report estimated a $12 billion reduction in labeling costs thanks to feature‑based pipelines.

Risks and Ethics

Every bright side casts a shadow. Let’s be honest about the pitfalls.

| Risk | Why It Matters | Mitigation |
|---|---|---|
| Privacy invasion | Facial‑recognition can track anyone in public spaces. | On‑device processing, transparent policies. |
| Bias in training data | Skewed datasets lead to unequal performance across demographics. | Diverse data collection, fairness audits. |
| Adversarial attacks | Subtle pixel changes can fool detectors, causing safety hazards. | Adversarial training, runtime anomaly detection. |
| Over‑reliance on automation | Humans may stop double‑checking critical decisions. | Human‑in‑the‑loop verification, clear fallback protocols. |
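To show how little it can take to fool a model, here’s a minimal sketch of the fast gradient sign method (FGSM) against a toy logistic‑regression classifier. This is a stand‑in: real attacks target deep detectors and usually clip the result to a valid pixel range, and the weights and input here are random, hypothetical values. The bias is chosen so the clean score is about 1.0, which makes the flip easy to see.

```python
import numpy as np

def sigmoid(s):
    return 1.0 / (1.0 + np.exp(-s))

def fgsm_linear(x, w, b, y, eps):
    """One FGSM step against a logistic-regression classifier p(y=1|x) = sigmoid(w.x + b)."""
    # Gradient of the logistic (cross-entropy) loss with respect to the input x
    grad_x = (sigmoid(x @ w + b) - y) * w
    # Move every input value by +/- eps in the direction that increases the loss
    return x + eps * np.sign(grad_x)

rng = np.random.default_rng(0)
d = 1000                    # e.g. a flattened 1000-"pixel" input
w = rng.normal(size=d)      # toy model weights (hypothetical)
x = rng.random(d)           # toy input with values in [0, 1)
b = 1.0 - x @ w             # chosen so the clean score w.x + b is about 1.0

x_adv = fgsm_linear(x, w, b, y=1, eps=0.01)
print(x @ w + b, x_adv @ w + b)  # clean score positive, adversarial score negative
print(np.abs(x_adv - x).max())   # each value changed by at most 0.01
```

Each input value moves by only 0.01, but because all the tiny nudges push the score the same way, their effect adds up across 1,000 dimensions.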

According to a 2024 IEEE review, incorporating robustness checks dramatically reduces false positives in security cameras without sacrificing speed.

Build Your Pipeline

Ready to roll up your sleeves? Here’s a step‑by‑step recipe you can try on your own laptop.

- Data Preparation: Resize images to a uniform size, convert to grayscale (if using classic detectors), and augment with flips/rotations.

- Feature Detection: Choose ORB for speed or SIFT for higher accuracy.

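- Descriptor Extraction: Run the selected detector’s detectAndCompute method, which returns the keypoints and their descriptors in one call.

- Feature Matching: Use BFMatcher for smaller sets or FLANN when you have millions of descriptors (for binary descriptors such as ORB, FLANN needs an LSH index).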

- Verification & Filtering: Apply RANSAC to discard outliers and keep only geometrically consistent matches.

- Hybrid Integration: Feed the filtered matches as additional channels into a CNN for a “best of both worlds” model.

Pro tip: If you’re targeting low‑power devices like a Raspberry Pi, stick with FAST + BRIEF (the combination ORB builds on); with modest image sizes, this can often stay under 30 ms per frame.

Future Trends Ahead

What’s on the horizon? A few exciting directions that could reshape visual features recognition as we know it.

- Self‑Supervised Learning: Methods like SimCLR and MoCo let models learn robust features without labeled data, drastically cutting the need for expensive annotation.

- Neuro‑Inspired Architectures: Researchers are wiring artificial networks to mimic the interplay between the amygdala and hippocampus, aiming for faster threat detection and memory‑based recall.

- Edge‑AI: Tiny, power‑efficient chips are making on‑device feature extraction a reality, which means less data leaves your phone—good news for privacy.

- Explainable Vision: Techniques such as Grad‑CAM now let us visualize which features triggered a decision, building trust with end users.
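For a feel of how SimCLR‑style self‑supervision works, here’s a minimal NumPy sketch of its NT‑Xent contrastive loss: two augmented views of the same image should have similar embeddings, and views of different images should not. The embeddings below are random stand‑ins for encoder outputs, the temperature of 0.5 is just an illustrative value, and MoCo’s momentum encoder and negative queue are not shown.

```python
import numpy as np

def nt_xent_loss(z1, z2, temperature=0.5):
    """SimCLR-style NT-Xent loss for a batch of paired embeddings z1[i] <-> z2[i]."""
    n = z1.shape[0]
    z = np.concatenate([z1, z2], axis=0)
    z = z / np.linalg.norm(z, axis=1, keepdims=True)  # unit vectors -> cosine similarity
    sim = (z @ z.T) / temperature
    np.fill_diagonal(sim, -np.inf)                    # an embedding is never its own negative
    positives = np.concatenate([np.arange(n, 2 * n), np.arange(n)])  # each row's partner
    # Numerically stable log-softmax over each row
    row_max = sim.max(axis=1, keepdims=True)
    log_norm = row_max[:, 0] + np.log(np.exp(sim - row_max).sum(axis=1))
    log_prob_pos = sim[np.arange(2 * n), positives] - log_norm
    return float(-log_prob_pos.mean())

rng = np.random.default_rng(0)
z1 = rng.normal(size=(8, 16))                   # embeddings of 8 images (view 1)
z2_good = z1 + 0.05 * rng.normal(size=(8, 16))  # view 2 agrees with view 1
z2_bad = rng.normal(size=(8, 16))               # view 2 unrelated to view 1
print(nt_xent_loss(z1, z2_good), nt_xent_loss(z1, z2_bad))  # first should be lower
```

Lower loss means each view picks out its partner from the rest of the batch, which is exactly the signal that lets these models learn features without labels.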

Conclusion

Visual features recognition sits at the crossroads of computer science, neuroscience, and everyday life. By learning how edges, corners, blobs, and deep‑learned patterns work together, we gain tools that power everything from selfie filters to life‑saving medical scans. At the same time, we must steward this power responsibly—protecting privacy, fighting bias, and staying vigilant against adversarial tricks.

So, whether you’re a budding engineer, a curious hobbyist, or just someone who loves to wonder how things work, I hope this walkthrough gave you both clarity and inspiration. Try out the code snippets, explore the neuroscience links, and keep asking “what if?”—because the future of visual perception is still being written, and you have a front‑row seat.
